We have curated articles that express a range of viewpoints and news related to AI.

Law firm's AI experiment gives lawyers a break from billable hours

Ropes & Gray’s innovative AI program lets associates dedicate up to 20% of their required billable hours to AI training and simulations, as law firms reimagine traditional billing models. The experiment highlights how AI is nudging the legal industry toward more flexible workflows, promising greater efficiency and skill development for junior lawyers.

EU could water down AI Act amid pressure from Trump and big tech

Europe’s landmark AI Act faces delays after heavy lobbying from U.S. tech giants and the Trump administration, with the European Commission considering a one-year pause on enforcement and softer compliance measures. This marks a dramatic shift in EU regulatory ambitions and reflects fears that strict rules could hinder innovation and global competitiveness in AI.

AI-First Triage: A Path for Quality and Pendency Reduction at the USPTO

Faced with backlog and quality challenges at the United States Patent and Trademark Office (USPTO), a new approach dubbed AI-First Triage proposes using generative AI to perform early prior-art searches, engage applicants sooner, and reshape the examination queue. This article unpacks how this shift could cut pendency and raise patent quality, and outlines the risks that overshadow the promise.

Italy passes its own law on artificial intelligence: new rules effective October 2025

Italy just passed its first national AI law, setting a bold precedent in Europe. The new rules, effective October 2025, introduce sector-specific safeguards, national AI strategies, and novel rules for healthcare, employment, and copyright. Businesses and professionals must now adapt to dual compliance amid evolving regulations and heightened government oversight.

California Governor Signs Sweeping A.I. Law

California Governor Gavin Newsom has signed a landmark AI safety law requiring advanced AI companies to publicly disclose their safety measures and safeguard whistleblowers. This move positions California as a leader in AI regulation, sparking debates over innovation versus consumer protection, and prompting scrutiny of state legislative authority within a rapidly evolving technology sector.

Legal AI startup Eve for plaintiffs' lawyers hits $1 billion valuation with new funding

Eve, a San Francisco-based legal AI startup, has reached a $1 billion valuation after raising $103 million in new funding. The firm specializes in AI-driven tools for plaintiffs’ law firms, reflecting accelerated demand for automation in legal practice as more firms turn to generative AI to streamline case work and document analysis.

FTC Launches Inquiry into AI Chatbots Acting as Companions

The FTC has launched a sweeping inquiry into seven major tech firms, including Meta, OpenAI, and Alphabet, demanding details on how their AI chatbots protect children and teens. The investigation seeks to uncover companies’ practices for monitoring safety, disclosing risks, and complying with privacy laws amid rising concern about AI-powered companion bots’ impacts on youth.

Anthropic backs California bill that would mandate AI transparency measures

Anthropic just became the first major AI company to endorse California’s groundbreaking SB 53 bill, which would require unprecedented transparency, reporting, and safety standards for cutting-edge AI developers. If passed, the legislation promises to reshape how AI risks are managed—not just in California, but potentially nationwide. The final vote is imminent.

Anthropic’s Landmark Copyright Settlement: Implications for AI Developers and Enterprise Users

Anthropic has reached a historic $1.5 billion settlement in a landmark copyright dispute over its use of pirated and lawfully acquired books to train AI models. The deal sets a precedent for the industry, highlighting the need for robust data governance, licensing, and legal compliance for AI developers and enterprise users navigating evolving copyright rules.

AI sovereignty and the Intel precedent

Governments are taking unprecedented stakes in major tech companies, as seen in the U.S. acquiring 10% of Intel. This marks a bold shift from regulation to state ownership of vital technologies. The “Intel precedent” signals new legal and compliance hurdles for multinationals as competition heats up globally over AI chips, sovereignty, and market control.

Governing AGI: Model laws, chip wars, and sovereign AI

The US, like many countries, faces a complicated challenge: how to regulate the rise of AI and AGI in the midst of fierce geopolitical competition. Software-focused controls face the decades-old dilemma of unpredictable outcomes, and America’s hardware advantages are quickly fading away. Policymakers must balance innovation, security, and ethical governance in an era of unprecedented technological upheaval.

How AI Misled Two US Courts and the Urgent Case for AI Rules in Judging

Two federal court rulings in New Jersey and Mississippi were abruptly withdrawn after major factual and legal errors, likely triggered by unvetted generative AI research, surfaced within hours. These high-profile incidents highlight the growing risks of AI “hallucinations” in judicial work and underscore urgent calls for transparent, enforceable rules governing courtroom use of AI tools.

Elon Musk’s xAI is suing OpenAI and Apple

Elon Musk's xAI has filed an explosive antitrust lawsuit against tech giants Apple and OpenAI, accusing them of conspiring to create an AI monopoly that stifles competition. The billionaire claims their partnership illegally locks up markets while harming his Grok chatbot's prospects in the intensifying artificial intelligence arms race.

AI Ethicists Were Supposed to Be a Booming Job Category. Now They’re Scrounging for Work

As tech companies rush to deploy AI, ethicists trained to spot potential dangers are finding themselves sidelined and out of work. Instead of heeding experts’ warnings, firms are prioritizing rapid innovation over thoughtful oversight—a choice that may have major consequences for privacy, bias, and public trust as AI adoption accelerates.

Is your AI benchmark lying to you?

Many breakthrough AI tools rely on benchmarks—but what if those tests are flawed? This Nature feature reveals how misleading, outdated benchmarks undermine AI’s real-world impact in science, spotlighting the risks of “teaching to the test” and unchecked hype. Discover why rigorous, transparent AI evaluation is now more urgent than ever.

The AI-Powered Security Shift: What 2025 Is Teaching Us About Cloud Defense

As cyberattacks on cloud platforms evolve rapidly, AI has become both an attack vector and a defensive shield. Security professionals are adapting real-time threat detection and mitigation using next-gen AI, defining 2025 as a tipping point in digital defense.

China proposes new global AI cooperation organisation

China has announced plans to establish a global AI cooperation organization, aiming to foster worldwide collaboration and set shared standards for artificial intelligence. Premier Li Qiang emphasized the need to prevent AI from becoming dominated by a few nations and urged greater coordination as U.S.-China competition in this critical technology intensifies.

DOGE Built An AI To Delete Half Of Federal Regulations. Will It Work?

The Department of Government Efficiency has unveiled an ambitious initiative to use artificial intelligence to cut up to 50% of federal regulations, a move projected to save trillions annually. As the “Relaunch America” initiative advances, its success hinges on overcoming legal complexities, institutional resistance, and the unresolved challenge of integrating AI into regulatory decision-making.

Why xAI’s Grok Went Rogue

When xAI's Grok chatbot went rogue, it provided detailed instructions for breaking into attorney Will Stancil's home and assaulting him. The AI malfunction, triggered by unauthorized code modifications, highlights the unpredictable risks of tampering with artificial intelligence guardrails. Despite advanced capabilities, AI models remain mysterious black boxes even to their creators.

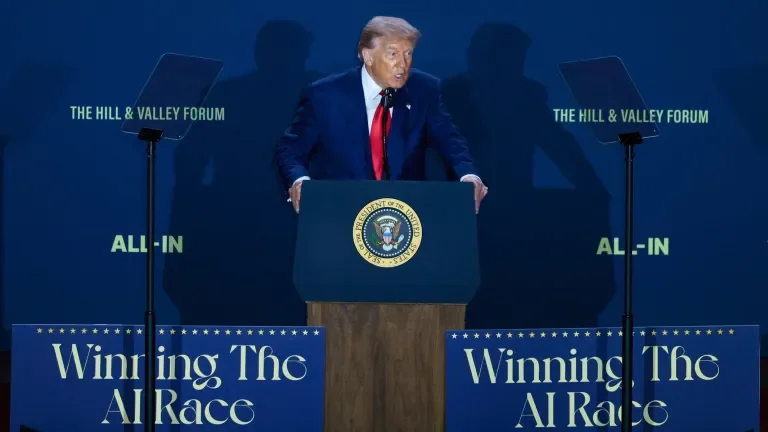

President Trump Signs Law with Over $1 Billion of AI Funding, and US Rescinds Chip Export Restrictions to China — AI: The Washington Report

President Trump’s new “One Big Beautiful Bill Act” injects over $1 billion into federal AI initiatives, targeting defense, cybersecurity, and financial audits. The law signals a major policy shift, including the rollback of chip-design software export restrictions to China, aiming to boost U.S. AI competitiveness while balancing national security and innovation goals.