AI Transparency and the “Black Box Paradox”

The debate over AI transparency long predates formal frameworks such as the EU AI Act or the proposed America’s AI Action Plan. As AI systems continue to advance, whether through generative AI or liquid neural networks, transparency becomes not only a technical challenge but also a policy imperative. At the core of transparency lies the principle of explicability: the ability to make an AI system’s internal technical processes comprehensible and to clarify how its outputs influence human decision-making.1

This principle calls for a comprehensive understanding of every aspect of an AI system (data, models, algorithms, etc.) and an examination of its entire analytical process, including its decisions. Modern AI systems, particularly neural networks, operate through artificial neurons. Unlike human reasoning, which involves conscious and interpretable steps, artificial neurons rely on statistical optimization processes that do not produce a transparent logic or rationale behind each decision. This difference gives rise to the black box problem.
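To make the contrast concrete, here is a toy sketch, assuming a single logistic neuron trained by stochastic gradient descent on a hypothetical task (output 1 only when both inputs are 1). The names `train_neuron`, `predict`, and `SAMPLES` are illustrative, not drawn from any source cited here. The point is what the optimization leaves behind: a few numeric weights and a bias, not a stated rule such as “if both inputs are on, answer yes.”

```python
import math
import random


def sigmoid(z: float) -> float:
    """Logistic activation: squashes a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, epochs=2000, lr=0.5, seed=0):
    """Fit one artificial neuron by stochastic gradient descent on log-loss.

    Hypothetical illustration: the learned 'knowledge' is only numbers.
    """
    rng = random.Random(seed)
    n_inputs = len(samples[0][0])
    weights = [rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]
    bias = 0.0
    for _ in range(epochs):
        for x, y in samples:
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = p - y  # derivative of log-loss w.r.t. the weighted sum
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def predict(weights, bias, x) -> int:
    """Return 1 if the neuron's output crosses 0.5, else 0."""
    return int(sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) >= 0.5)


# Hypothetical toy task: logical AND of two binary inputs.
SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    w, b = train_neuron(SAMPLES)
    print("learned parameters:", [round(v, 2) for v in w], round(b, 2))
    print("predictions:", [predict(w, b, x) for x, _ in SAMPLES])
```

With one neuron and two inputs, a reader can still reverse-engineer what the numbers mean. Large models stack millions or billions of such parameters, and at that scale reading a rationale back out of the weights becomes impractical, which is the opacity described next.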

The black box problem can be defined as the inability to fully understand the decision-making process of an AI system and, consequently, to predict its decisions. The degree of opacity depends on the model’s complexity. Some “low” black boxes can be partially opened, revealing certain elements, and thus become “grey”.2 Nonetheless, black box models generally remain so opaque that post hoc interpretation of the algorithm is impossible,3 and they cannot even be analyzed through reverse engineering.

We cannot determine which information is decisive in the decision-making process. Despite the black box paradox, which makes it impossible to interpret and explain the decisions of some AI systems, current legislation continues to promote transparency. The Villani report even identified opening the black box as one of the major challenges of the digital economy.4 This push for transparency risks constraining innovation and consolidating the market power of established AI companies. Such regulation would be problematic for several reasons.

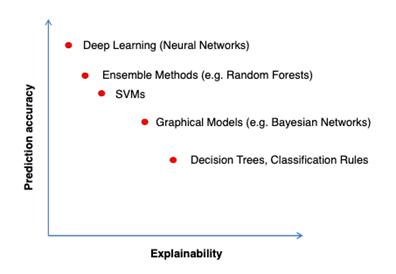

The graph illustrates the trade-off between transparency (explainability) and performance (prediction accuracy).

Source: H. Dam, T. Tran, A. Ghose, Explainable Software Analytics, 2018.

First, legislators are tempted to pass laws mandating AI transparency, but transparency is fundamentally a technological problem. Artificial intelligence cannot be regulated as a single entity or an immutable concept. AI encompasses a wide range of architectures that evolve quickly and differ in interpretability, and its regulation is inevitably intertwined with other fields (computer science, philosophy, economics, etc.).

Moreover, it is not certain that making machine learning transparent would make it more controllable. It is even likely that the more artificial intelligence evolves, the greater its opacity will be.5 If this is the trajectory of AI, then it makes little sense to impose regulations requiring minimum levels of transparency.6 Algorithms may never achieve the levels of interpretability desired by governments and think tanks.

This concern can also be illustrated, by analogy, through an economic lens: the Laffer Curve. The Laffer Curve formalizes the idea that beyond a certain point, tax revenues decline as tax rates rise, because economic agents increasingly circumvent the tax rules. A similar dynamic may arise if transparency requirements become too burdensome: companies may deliberately bypass regulatory requirements that impose excessive constraints.
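The Laffer logic can be written as revenue R(t) = t · B(t), where t is the tax rate and B(t) is the taxable base that remains at that rate. The sketch below is a toy model only: it assumes a base that shrinks linearly with the rate, B(t) = B0 · (1 − t) with a hypothetical B0 = 100, a functional form chosen purely for illustration. The helper names `tax_base`, `revenue`, and `revenue_maximizing_rate` are likewise hypothetical.

```python
def tax_base(rate: float, base0: float = 100.0) -> float:
    """Hypothetical taxable base: shrinks linearly as agents avoid a heavier tax."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be between 0 and 1")
    return base0 * (1.0 - rate)


def revenue(rate: float) -> float:
    """Revenue R(t) = t * B(t): the rate applied to whatever base remains."""
    return rate * tax_base(rate)


def revenue_maximizing_rate(steps: int = 100) -> float:
    """Grid-search the rate (in steps of 1/steps) that yields the most revenue."""
    rates = [i / steps for i in range(steps + 1)]
    return max(rates, key=revenue)


if __name__ == "__main__":
    for t in (0.2, 0.5, 0.8):
        print(f"rate {t:.0%}: revenue {revenue(t):.1f}")
    print("peak at", revenue_maximizing_rate())
```

Under these assumptions, a 20% rate and an 80% rate collect the same revenue (about 16), while 50% collects the maximum of 25: pushing the rate past the peak yields less, not more. In the essay’s analogy, the rate stands for the stringency of transparency requirements and the base for the activity that stays within reach of the rules; the toy model conveys the shape of the argument, not any quantitative claim about AI regulation.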

In addition, stringent transparency requirements would impose significant costs on new entrants to the AI market.7 Given that artificial intelligence is already concentrated in the hands of about a dozen companies,8 imposing transparency compliance costs on new companies and start-ups would add another barrier to entry and reinforce anticompetitive concentration.

That said, AI transparency is not inherently harmful to innovation. It is simply preferable that the degree of transparency required of AI remain reasonable rather than be codified in a set of mandatory regulatory standards. Such standards would either limit innovation or indirectly promote anticompetitive concentration by blocking new entrants to the AI market. As a compromise, it is therefore more appropriate to regulate AI transparency at reasonable levels.

Transparency should be understood not as a binding regulatory principle but, on the contrary, as a means of building user trust by improving explicability, security, and standardization.

Certification is a potentially promising way to protect individuals subject to algorithmic decisions. The Cybersecurity Act is an EU regulation that established a European cybersecurity certification framework.9 The certification process must be able to identify system vulnerabilities and their potential impacts. This means taking into account the data, the potential causes of system failure, and any data not used during the training phase of the algorithm. Mandatory certification for artificial intelligence would avoid limiting the technical progress of AI while guaranteeing users that the system in question complies with European cybersecurity provisions. The black box would remain closed, but the entire program (everything around the black box) would be deemed secure and compliant with international legal and ethical standards.

Alternatively, the Villani report10 proposes developing AI auditing, which would be carried out by a body of experts and based on a test of fairness and loyalty. However, auditing runs into the same black box problem: if the program’s reasoning cannot be determined and the dispute stems from the black box itself, the audit will not be able to find anything.

Expectations for AI transparency are high given the technology’s rapid evolution and the rise of AI-powered innovations. Nonetheless, it is crucial to recognize the limits of current algorithms, which cannot be fully explained. Rather than aiming for total interpretability, policymakers could encourage alternative approaches such as soft-law instruments and certification to promote AI that is trusted, transparent, and performant.

1 High-Level Expert Group on Artificial Intelligence, “Ethics Guidelines for Trustworthy AI,” April 8, 2019, p. 18.

2 I. BUDOR, “Explicabilité de l’Intelligence Artificielle – un défi majeur pour une adoption durable,” Capgemini, May 29, 2020.

3 R. GUIDOTTI, A. MONREALE, S. RUGGIERI, F. TURINI, F. GIANNOTTI, D. PEDRESCHI, “A Survey of Methods for Explaining Black Box Models,” ACM Computing Surveys (CSUR), p. 93, 2019.

4 C. VILLANI, “Donner un sens à l’intelligence artificielle pour une stratégie nationale et européenne,” 1st version, March 28, 2018, p. 140.

5 I. KOMARGODSKI, M. NAOR, and E. YOGEV, “White-Box vs. Black-Box Complexity of Search Problems,” IEEE 58th Annual Symposium on Foundations of Computer Science (FOCS), Weizmann Institute of Science, 2017.

6 Y. BATHAEE, “The Artificial Intelligence Black Box and the Failure of Intent and Causation,” Harvard Journal of Law & Technology, Volume 31, Number 2, 2018.

7 C. S. SPATT, “Complexity of Regulation,” Harvard Business Law Review, June 16, 2012.

8 The “Magnificent Seven”: Alphabet, Amazon, Apple, Meta Platforms, Microsoft, Nvidia, and Tesla.

9 European Parliament and Council, Regulation (EU) 2019/881 (Cybersecurity Act), April 17, 2019.

10 C. VILLANI, “Donner un sens à l’intelligence artificielle pour une stratégie nationale et européenne,” 1st version, March 28, 2018, p. 144.

Samy Jacoubi is a legal specialist and researcher in AI policy with a background from UCLA Law and cross-border experience in the US and EU. He previously worked on JusPublica AI, an initiative focused on streamlining legal processes in the public sector, and authored a thesis on AI regulations.